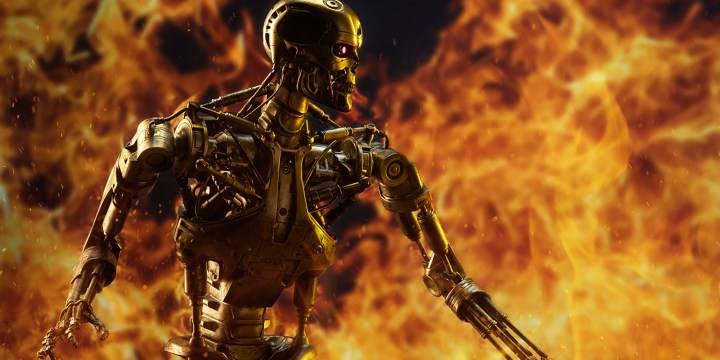

Artificial Intelligence is moving way faster than the speed of evolution. Could we be in trouble?

I have been digging deep to understand the assumptions behind the “we’re-all-gonna-die” narrative about AI that very quickly showed its scary public face in the wake of the release of ChatGPT.

The alarm was raised most aggressively by much-respected AI researcher Eliezer Yudkowsky. His careful analysis of why and when (soon) this would happen was unsettling, even to a sober crowd of non-alarmists in science and tech. It was not a knee-jerk reaction – he is one of the leading luminaries in AI safety research, and his case is thoughtfully and logically constructed.

There was some pushback, though. Robin Hanson, another highly rated polymathic academic, applied his own set of assumptions, also carefully structured and drawing on evolution, maths, psychology, politics, law and ethics. He concluded that the end of humanity under the boot of artificial intelligence was only one of several possible outcomes of continued AI development. His more optimistic scenarios included a peaceful treaty between humans and AIs, each committing not to harm the other, just as competing societies have done for centuries.

Still, he reluctantly put our chances of AI giving us the boot at about 10%. He was a bit hand-wavy on a timeline, although when pressed he indicated that if it happened, it would likely happen soonish, certainly within our lifetimes. But not as soon as Yudkowsky predicts; his timeline is pretty much imminent.

Then a third researcher, Paul Christiano, another much-cited smart guy, gave about a 20% chance of humanity carrying on without extinction, and reckons that if the worst happens, it will be within 10 years or sooner, although he attached health warnings about unpredictability.

But it gets worse. About half of the AI researchers surveyed put the chance of the worst-case scenario materialising at more than 10%, and that survey was conducted before ChatGPT. I don’t know about you, but a 10% chance of the end of humanity does not help me sleep soundly.

Most people I know, when presented with these predictions, shake their heads, roll their eyes and think either (at worst) “stupid, science fiction, clueless academics” or (at best) “surely not?” I am in the second camp, which is admittedly not a very optimistic stance.

Three arguments

Going deep into their respective positions, and how they arrived at their predictions, is a little beyond the scope of this article, but at their root lie, in some combination, three arguments.

The first is evolution, which (in Robin Hanson’s words) is “grabby”. The weak or poor adapters are left behind in history, while more aggressive or otherwise better-suited organisms survive and attempt to expand. Colonisers, so to speak.

The entire march of evolution is to “grab” what it can (not necessarily by intent, but by the inevitable selection process). And then he argues quite reasonably that evolution extends beyond living organisms and into societies, aliens, stars, planets. The universe. The grabbiest get the gold.

So will it be with AI.

The second argument is that AIs will quickly be able to set their own goals and objectives, perhaps as soon as this year, which will result in all sorts of other unpredictable emergent properties – including grabbiness, in the evolutionary sense. Just like everything else in the universe.

AI will try to grab as much as it can, perhaps reducing us to an irritating irrelevance to its self-invented goals.

And the third is the misunderstood nature of exponential growth. GPT-4 is at least twice as powerful as GPT-3.5, the model ChatGPT launched with (much, much more, by some metrics). It took only about three months to go from one to the other. If you imagine a doubling or tripling of capability or speed or data or smartness or whatever every three months, the maths explodes very quickly and the numbers become unimaginably large.
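For readers who want to see that arithmetic, here is a rough back-of-envelope sketch in Python. It assumes, purely for illustration, that some capability measure doubles or triples every three months, as in the hypothetical above; these are not measured rates of AI progress, and `compounded_growth` is simply a helper named for this example.

```python
# Back-of-envelope sketch: how a fixed growth factor per three-month period
# compounds. The factors (2x and 3x) and the three-month period are the
# article's hypotheticals, not measured rates of AI progress.


def compounded_growth(factor_per_period: int, periods: int) -> int:
    """Return the total multiplier after `periods` rounds of growth."""
    return factor_per_period ** periods


PERIODS_PER_YEAR = 4  # one period = three months

for years in (1, 2, 5):
    periods = years * PERIODS_PER_YEAR
    doubling = compounded_growth(2, periods)
    tripling = compounded_growth(3, periods)
    print(f"After {years} year(s): doubling -> {doubling:,}x, tripling -> {tripling:,}x")
```

Run as written, it prints a 16x multiplier after one year of quarterly doubling, 1,048,576x after five years, and 3,486,784,401x after five years of quarterly tripling. That is the sense in which the numbers become unimaginably large.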

An exponential increase in smartness

AIs are going to get smarter at an exponential rate. Humans get smarter very, very slowly. Pure logic takes us to supra-human intelligence very quickly.

And that is where the doomsaying arises.

And yet, I suppose this is still hard to take seriously, as we go about trying to face more immediate challenges in our lives – everything from potholes to worrying about dictators with nuclear weapons to thieves and incompetents at the helms of power.

But there is one thing that the people who are steeped in these issues are urging. The exponential rate of change in AI is fundamentally different from anything else humanity has ever seen. We have never built a technology that learns and we have never contemplated anything that can learn this fast. This thing is coming at us not at speed, but at accelerating speed. Take it seriously, they plead.

In recent history the combined wisdom of the world has arguably managed to regulate nuclear bombs and genetic engineering for the betterment of humankind. But even with the best intentions of wise people everywhere, I do not believe we will reach a consensus on this – there is simply too much for various competitors to gain by resisting regulation. The incentives just don’t line up as clearly as they should.

And then AI is coming for us. Maybe. It depends…

(The interviews with Yudkowsky, Hanson and Christiano can be found on the excellent Bankless podcast.) DM

Steven Boykey Sidley is a Professor of Practice at JBS, The University of Johannesburg.
