GUEST ESSAY

We’re not all going to die – AI optimism fights back

On 6 June, Internet pioneer, tech entrepreneur and venture capitalist Marc Andreessen published a 7,000-word blog post optimistically entitled ‘AI Will Save the World’. After I had fallen prey to AI pessimism and we’re-all-gonna-die narratives of late, it was a refreshing and convincing view.

There is a nifty site on the Internet called the Pessimist’s Archive. It is a compendium of newspaper articles, some from as far back as the 19th century, warning about the dangers of whatever new technologies were showing themselves. All are amusing in hindsight, mostly written by outraged journalists. Typewriters! Radio! Cars! Air travel! Machines! The end of civilisation! Handy copies of the original and slightly faded newspaper articles are provided, with the breathless alarmism highlighted in yellow.

And of course, there is plenty about AI. The poster boy of current technology pessimism. Decried, dissed, feared and reviled – continuously for decades before ChatGPT, way back to the 1950s. And now it seems as though this froth of moral panic about AI is bound to lead to some questionable policy decisions.

But not for Marc Andreessen, from whom I learnt about the existence of the Pessimist’s Archive. For those who don’t know, he became famous while still a student for co-creating Mosaic, the first widely popular graphical web browser, and then co-founded Netscape, whose Navigator browser burst upon a nascent web in the mid-1990s. Lore has it that Navigator was brutally crushed by Microsoft as its threat became visible, quickly to be replaced by Microsoft’s own Internet Explorer.

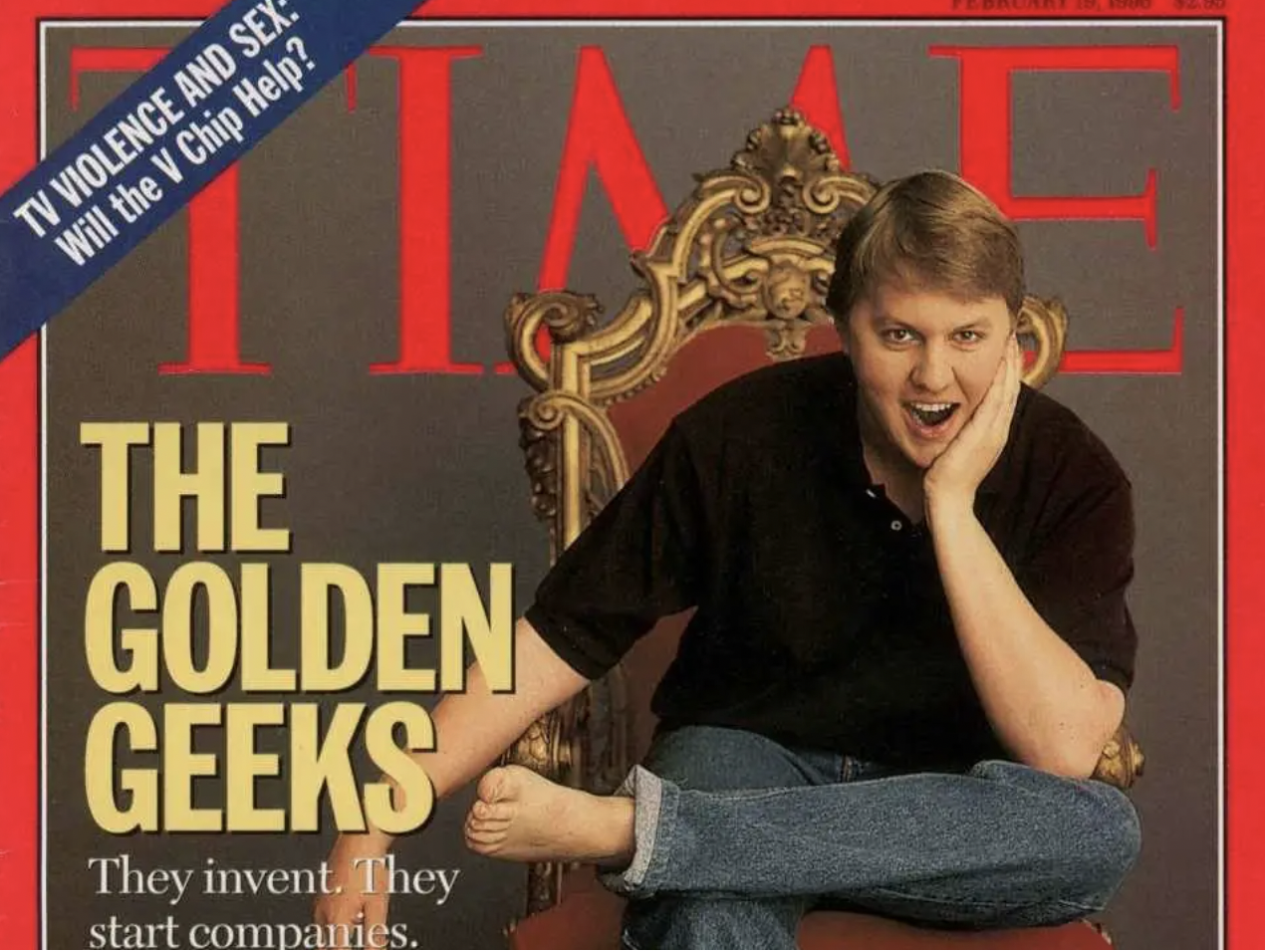

A couple of things subsequently happened to Andreessen. He made the cover of Time Magazine in 1996 at 24 years old, sitting on a throne in jeans and bare feet. The headline was “The Golden Geeks”. That made him very famous indeed; Time Magazine was rather a bigger deal in those days.

The 1996 Time Magazine cover featuring Marc Andreessen. (Photo: Supplied)

Later he went on to co-found one of the most successful venture capital companies in the world, Andreessen Horowitz, which has had a hand (and a piece of equity) in, well, just about every major tech startup in the past 15 years, including Airbnb and Lyft and DoorDash and Slack and Pinterest. He then got even more famous with an 853-word essay in the Wall Street Journal titled “Software is Eating the World”, which has become much cited in boardrooms all over the world.

So, famous, smart, rich then. In case you were wondering, this is not a salacious article about his downfall. I hope it never happens because he has been extraordinarily prescient in reading the future of tech, and I am a bit of a fanboy.

Anyway, on 6 June, Andreessen published a 7,000-word blog post that sort of broke the Internet. Or at least that part of the Internet chattering and arguing and fussing about AI, which sometimes seems like all of it. The blog was optimistically entitled “AI Will Save the World”. And, after I had fallen prey to AI pessimism and we’re-all-gonna-die narratives of late, it was a refreshing and convincing view for me.

His thesis unfolds like this:

There is an overwhelming body of research showing that higher (human) intelligence results in lots of good things, both collectively (for humankind) and individually (for the lucky smart person).

“The most validated core conclusion of social science across many decades and thousands of studies is that human intelligence makes a very broad range of life outcomes better. Smarter people have better outcomes in almost every domain of activity: academic achievement, job performance, occupational status, income, creativity, physical health, longevity, learning new skills, managing complex tasks, leadership, entrepreneurial success, conflict resolution, reading comprehension, financial decision making, understanding others’ perspectives, creative arts, parenting outcomes and life satisfaction.

“Further, human intelligence is the lever that we have used for millennia to create the world we live in today: science, technology, maths, physics, chemistry, medicine, energy, construction, transportation, communication, art, music, culture, philosophy, ethics, morality. Without the application of intelligence on all these domains, we would all still be living in mud huts, scratching out a meagre existence of subsistence farming. Instead, we have used our intelligence to raise our standard of living on the order of 10,000 X over the last 4,000 years.”

Then Andreessen goes on to paint AI as the most powerful human intelligence augmentation tool yet developed. He describes the prospect of a child with an infinitely patient and empathic teacher who can spot gaps in knowledge and gently fill them. Or the scientist with a genius AI colleague who knows all the latest developments in proximal fields that our scientist does not, and who can immediately spot and suggest relationships between them. He even suggests that AI will, above all, be humanising, allowing us to achieve our greater visions in science and art which would have been otherwise out of reach.

You get the gist. This list of utopian AI outcomes is not new; it has been promised for decades. Only now can we see it shimmering out there on the horizon.

But the core of his essay is in his determinedly swatting away the AI risks that are so often raised, and this is where the essay really shines. Going through each in detail would make this article too long for good taste, but here is the summary:

Risk #1: Will AI kill us all?

Nope. This is a hand-wavy and anti-scientific prediction based on the fallacy that AI will be able to formulate and attain its own internal “goals and objectives”, and that one of those goals will be to off us all. All without us noticing. AI is maths and software created and owned and controlled by us, and emphatic statements about autonomous and uncontrollable AI goal-setting come unaccompanied by any evidence, even from the (very) smart people who have taken this position.

Risk #2: Will AI ruin society?

This is the much debated “AI alignment” problem. How do we constrain AI to support only values which benefit human society? Which raises an impossible hurdle. Whose values? Ours? North Korea’s? China’s? Stern hall monitors of what are assumed to be “human values” will certainly ask for policing duties but, as we have seen in the US culture wars, it simply does not work except in small bubbles of the like-minded. And the world is not one bubble. Beware the hall monitors. They are more dangerous than AI; they will be eager to tell you what to think.

Risk #3: Will AI take all our jobs?

No, is the very short answer. Based on hundreds of years of economic data about new technology-sparked job panics versus the actual jobs created by those same technologies. And AI will be no different. It will reduce the marginal cost of production, thereby lowering costs of goods, thereby providing more discretionary capital to citizens, thereby increasing demand, thereby stimulating investment, thereby increasing employment. A long causal chain, I know, but well supported in economics.

Risk #4: Will AI lead to crippling inequality?

Here Andreessen argues, perhaps a little less convincingly, that it is not the roll-out of new technologies that increases inequality, but the reverse. The resistance to new technologies often comes from monopolistic corporations and government regulators – an attempt to ward off competition and to keep control. It is the centralisation of wealth and power that is the real problem. Decentralised and low-cost access to something as powerful as AI will reduce inequality by widening access to productive services.

Risk #5: Will AI lead to bad people doing bad things?

This is more than a risk – it is a certainty, given the darkness in the hearts of men. Andreessen makes two points. The first is that AI is just a tool, and tools have always been available to both the virtuous and the venal. And the second is that AI is already out there in the world. Open-sourced code and research papers and algorithms and how-to videos. It cannot be banned, stopped, paused or restrained. And laws are already on the books to sanction bad actors and actions, which has always been society’s best defence. And, finally, the best mechanism we will have for policing bad actors in AI is… AI itself. Its ability to ingest data, see patterns and infer consequences. Potentially it will be the best crime protection tool we have yet seen.

Finally, Andreessen gets to the climax of his thesis – the greatest AI risk of all.

“The single greatest risk of AI is that China wins global AI dominance and we – the United States and the West – do not.”

I raised my eyebrows a bit here (why China and not Russia or North Korea or Iran? Or even the mega-corporations?). He explains: China has been vocal and emphatic about its world view. It is repugnant to most of us – 100% citizen surveillance and individual subservience to the state in every facet of citizens’ lives. China’s attempts to export its political system globally are already under way (it is unashamedly open about this goal) – anyone sceptical of this should read this terrifying piece of deep reporting. China also has the wealth, reach and staying power that most do not.

So what are we to do? Andreessen’s conclusion is not a surprise. Do not regulate AI. Do the opposite. If you are a government or private industry, lavish money on it and let it thrive and stop China from winning.

And that, he concludes, is how AI will save the world. DM

Steven Sidley is a Professor of Practice at JBS, University of Johannesburg. His new book It’s Mine: How the crypto industry is redefining ownership will be published in August 2023.

After AI literally fabricated cases, case numbers and legal opinions, and after ChatGPT failed three tests that a 16-year-old science or computer science student could clear in under a minute, I am unconcerned that AI poses a threat to human intelligence.

Yes, there is the worry about the mayhem it can sow in social media, but that was anyway a place for fools.

How good was AI at this 5 years ago? How good will it be in 5, 10 years?

Wow! What a brilliant article. Thanks Steven.

Your point 1 is flawed.

And point 1 is what all the AI researchers are freaking out about: no one really knows what AI will do. It’s not that we know it has goals; it’s that we don’t know that it can’t have goals. Neural networks have emergent properties, which means they produce outcomes that were not planned or expected. We have seen this time and time again: it can do things that the creators never expected or knew it could do.

When you create something that you don’t understand, the possibility of it doing things you don’t expect rises dramatically. And if some of those unexpected emergent properties are things like “having its own goals”, or “developing its own internal set of ethics”, then we need to make sure those goals and ethics don’t include wiping us out.

You simply cannot have a scientific approach to something that you have no data on. Expecting scientific proof that AI won’t develop its own goals and motives is like asking for scientific proof that there are no rocks shaped like Mandela’s face under the oceans on Europa. It’s only after rigorous exploration that you can make a claim, and right now we have barely started exploring what AI can do.

GPT-3.5, the AI that woke the world to AI, was only released in Nov 2022.