OP-ED

South Africa’s AI blind spot may have repercussions for the economy in our changing digital world

Failure to develop a national artificial intelligence strategy risks leaving South Africa out in the cold, without a foothold in a rapidly changing digital world, as a wave of innovation and productivity gains arrives in the years ahead.

Artificial intelligence (AI) is causing significant structural changes to global competition and economic growth. It has the potential to generate trillions of dollars in new value over the next decade, but there is a risk that this value will not be easily captured or evenly distributed across nations.

Participating in the value generated by AI will depend on how governments and industries invest in the underlying computational infrastructure that makes AI possible.

The completeness of a country’s national AI strategy helps predict that nation’s ability to compete in the global digital economy. Yet, because of a blind spot, few national AI strategies reflect a robust understanding of domestic AI compute capacity, how to use it effectively, and how to structure it resiliently. This is similar to rolling out a national health strategy without knowing how many hospitals are available. The result is a divide between countries in their ability to train and run the complex AI models that confer competitive advantage: so-called “compute divides” that can stifle innovation across academia, industry, and start-ups.

To help countries grapple with the issue, the Organisation for Economic Co-operation and Development (OECD) has published the first blueprint to help countries plan for AI compute capacity that meets the needs of their national AI strategies.

A major stumbling block is that there is not enough data for countries to answer three fundamental questions: How much domestic AI compute capacity do we have? How does this compare to other nations? And do we have enough capacity to support our national AI ambitions?

This creates a risk that national AI plans remain aspirational rather than detailed enough to be operational. A few countries have announced initiatives to increase the compute available for research and academia, including the United States’ National AI Research Resource and Canada’s Digital Research Infrastructure Strategy. Canada and the United Kingdom have also begun assessing their compute infrastructure needs more broadly, but planning for specialised AI compute needs across the economy remains a policy gap. South Africa has not undertaken any such assessment.

According to Celine Caira, economist in the OECD AI Unit, “Policy makers need good data to make good policy. When it comes to AI compute, there is a measurement gap. Countries don’t know how much they have, nor how much they need. We convened the OECD.AI Expert Group on AI Compute and Climate to help fill this gap and provide countries with tools to plan their AI infrastructure today and in the years ahead.”

Special infrastructure needs for AI systems have grown dramatically over the last few years, especially for deep learning and neural networks.

The relative lack of attention to this issue can be explained, at least in part, by the highly technical nature of AI compute, which often requires a level of training and education beyond that of most policy makers. Another reason may be that many government officials mistakenly believe that AI compute is commoditised and easily sourced, as traditional information technology (IT) infrastructure is, given that commercial cloud providers are now widespread and offer robust services at scale. This model of “infrastructure as a service” extends to computing for AI, and the public cloud is rightly credited with democratising access. At the same time, it has bred complacency: an assumption that AI compute will be there when we need it. AI, however, is not the same as IT, and it has special infrastructure needs.

The compute needed to train modern machine learning systems has grown hundreds of thousands of times over since 2012 (OpenAI, 2018; Sevilla et al., 2022). Compute enables two key steps in the development and use of machine learning-based AI systems: 1) training, meaning the creation and selection of models/algorithms and their calibration; and 2) inference, meaning using the AI system to determine an output. Training is usually the more demanding process in terms of memory and compute resources, and given its significant data and compute requirements, it is more likely to be conducted on centralised, high-performance computers. Deployment, by contrast, has more variable compute requirements: inference is frequently conducted on computationally less powerful devices, such as smartphones.

Without the right compute capacity, countries will miss out on the competitive advantages and productivity gains AI innovations bring.

Despite all the hype around ChatGPT, South Africa has neither the compute nor the engineering capacity to train a large language model of that kind. Relatedly, the ability to train large foundation models that could unlock novel therapeutics, for example to treat undruggable diseases in cancer, neurodegeneration, infectious disease, and other areas, is out of reach. These models are used to build AI-augmented structural biology tools, such as AlphaFold (Jumper et al., 2021), to transform the design of RNA-targeted and RNA-based medicines. The models are integrated into a virtuous cycle with purpose-designed, in-house wet-lab assays to discover and design RNA drugs, tightly coupling algorithmic development, large-scale data generation, and compute.

Further, training and deploying AI systems can require massive amounts of computational resources with their own environmental impacts through energy and water use, greenhouse gas emissions, and overall life cycle impacts. To fully harness AI technologies to achieve domestic economic growth goals and to help meet national and global sustainability goals, policy makers, civil society and private sector actors need accurate and reliable measures of the environmental impacts of AI. However, there is currently a lack of consensus on benchmarks and a shortage of data in this area, meaning such information is not available for evidence-based decisions.


Divergence in AI compute capacity can also reinforce existing socioeconomic divides, both within countries (between sectors and regions) and internationally.

According to the November 2022 Top500 list (Top500, 2022), only 34 countries in the world have a “top supercomputer”. Such supercomputers are used primarily for science, but in recent years some have been upgraded to run AI-specific workloads as well, although the list does not distinguish supercomputers specialised for AI.

According to this list, the US has the highest share of total computing power (44%), with 127 supercomputers on the list (25%), while China leads in the number of supercomputers (162 or 32% of the list) but only represents 11% of the list in terms of total computing power. This shows that counting supercomputers does not give a full picture of national compute capacity, as some supercomputers are more powerful than others. Apart from leading countries (the US, China, countries from the EU27, the UK, and Japan), the rest of the world makes up 12% of the supercomputers on the list, with countries from the Global South sparsely represented.
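The gap between counting systems and measuring capacity can be made concrete with a little arithmetic on the figures above. The sketch below divides each country’s share of total list computing power by its share of systems. The resulting ratio, called a “power-per-system index” here purely for illustration (it is not a Top500 or OECD metric), is above 1 when a country’s systems are on average more powerful than the list average and below 1 when they are less powerful. It assumes the list holds 500 systems and uses the rounded percentages quoted above, so the results are approximate.

```python
# Figures from the November 2022 Top500 list as quoted in this article (rounded).
LIST_SIZE = 500  # assumption: the Top500 list contains 500 systems

# country -> (number of systems on the list, share of total list computing power)
TOP500_NOV_2022 = {
    "United States": (127, 0.44),
    "China": (162, 0.11),
}


def power_per_system_index(systems: int, power_share: float,
                           list_size: int = LIST_SIZE) -> float:
    """Hypothetical illustrative metric: share of power divided by share of systems.

    1.0 means the country's systems are, on average, as powerful as the list
    average; above 1.0 means more powerful, below 1.0 means less powerful.
    """
    system_share = systems / list_size
    return power_share / system_share


for country, (systems, power_share) in TOP500_NOV_2022.items():
    index = power_per_system_index(systems, power_share)
    print(f"{country}: {systems / LIST_SIZE:.1%} of systems, "
          f"{power_share:.0%} of power, index {index:.2f}")
```

On these rounded figures the index comes out at about 1.73 for the US and about 0.34 for China, which is how China can lead on system count while holding a far smaller share of total computing power.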

Nearly 90% of top supercomputers on the list were developed in the last five years, highlighting the speed with which computing hardware, infrastructure and software are being developed and brought to market.

Confirming this opportunity gap, a study (Ahmed et al., 2020) found that since the rise of deep learning, universities ranked 301-500 by U.S. News and World Report have published on average six fewer papers at AI research conferences, or 25% fewer than a counterfactual estimator. Fortune 500 companies, Big Tech leaders, and elite universities, which often receive large private-sector resources and endowments, saw dramatically different trends.

The study found that an increased need for specialised equipment can result in “haves and have-nots” in a scientific field. “We contend that the rise of deep learning increases the importance of compute and data drastically, which, in turn, heightens the barriers of entry by increasing the costs of knowledge production,” the paper reads.

Likewise, a report (UK Government Office for Science, 2021) on large-scale computing noted that many smaller research centres and businesses have difficulty gaining access to large-scale computing platforms in the UK, which curtails the scope of their AI development.

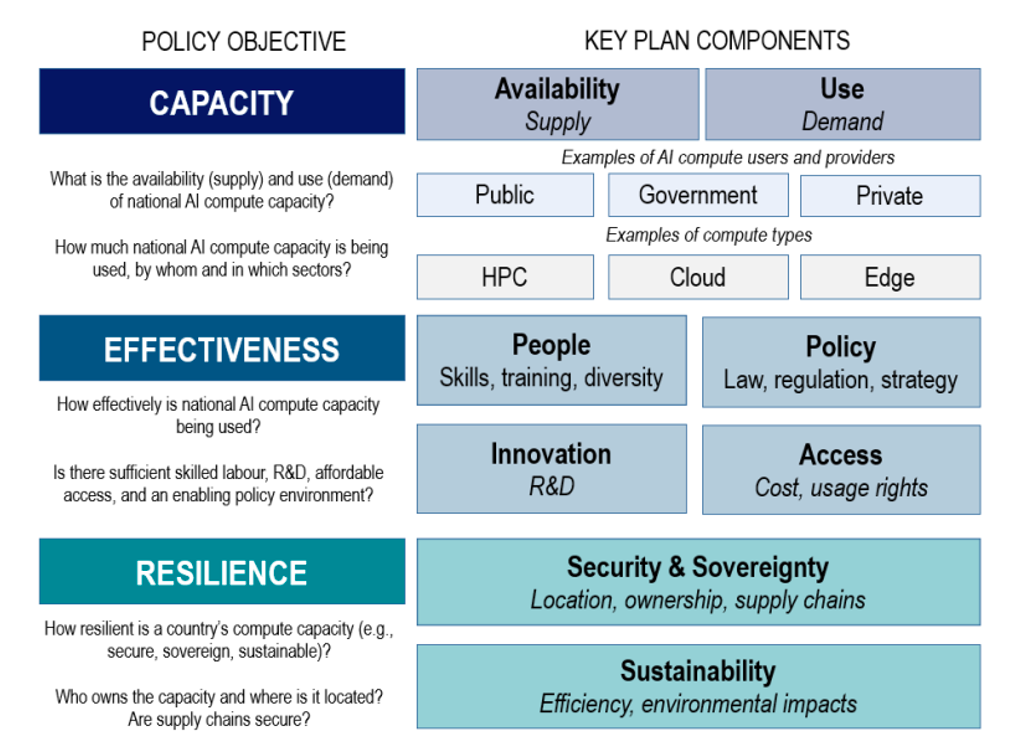

The blueprint helps countries consider AI compute’s capacity (availability and use), effectiveness (people, policy, innovation, access), and resilience (security, sovereignty, sustainability).

Blueprint for national AI compute planning. (Source: OECD.AI Expert Group on AI Compute and Climate)

The blueprint identifies steps governments can take to measure and benchmark domestic AI compute capacity for future planning:

- Include AI compute capacity in national AI policy initiatives.

- Expand national and regional data collection and measurement standards.

- Provide insights into the compute demands of AI systems.

- Differentiate AI-specific measurements from general-purpose compute.

- Ensure access to AI-compute-related skills and training.

- Analyse and map AI compute supply chains and inputs.

To keep up, South Africa must plan its AI infrastructure.

Perpetuating marketing narratives around the “fourth industrial revolution” while paying little attention to the hardware, software, and related compute infrastructure that make AI advances possible will bring little benefit to individuals and institutions in South Africa.

Government, industry, and academia in South Africa must act by measuring and planning for the computational infrastructure needed to advance the country’s AI ambitions. The future of the economy is at stake: the ability to harness the power of data responsibly to boost productivity, create new businesses and jobs, improve public services, support a fairer society, and drive scientific discovery. Failure to act will leave South Africa taking a back seat in the wave of innovation and productivity gains in the years ahead. DM

Gregg Barrett is the CEO of Cirrus, Africa’s AI initiative. He is a member of the OECD.AI Expert Group on AI Compute and Climate.

Thank you for this insightful contribution. I believe that like so many technological innovations, AI has the power to either bridge or widen socio-economic divides. The lack of a coherent South African strategy or plan for an AI infrastructure increases the danger of us sliding down the rabbit hole of a dystopian future where technology becomes all-powerful in the hands of a few. We should be aware that the ‘People’ component of the Blueprint for AI infrastructure is extremely critical in South Africa. We need people with the relevant IT skills to build and maintain the AI infrastructure and develop models, tools and apps that are relevant in the South African context. And for AI innovations to contribute to an inclusive society, we also need ethicists, lawyers and governance specialists who understand what the impact of AI could be on society and can prepare adequate regulation and governance around it. Moreover, we all need to be digital citizens who are aware of the technological culture in which we live, know how it influences our lives and have a say in its design and use. All of these skills and attributes are in very short supply in our country, and considering the limited scope and speed of current interventions, I don’t hold much hope that this will be remedied in the short term.

Your call for a strategy for South Africa’s AI infrastructure should go hand in hand with Professor Tshilidzi Marwala’s call for a skills revolution in his contribution from 4 October 2022.