DEVELOP AI OP-ED

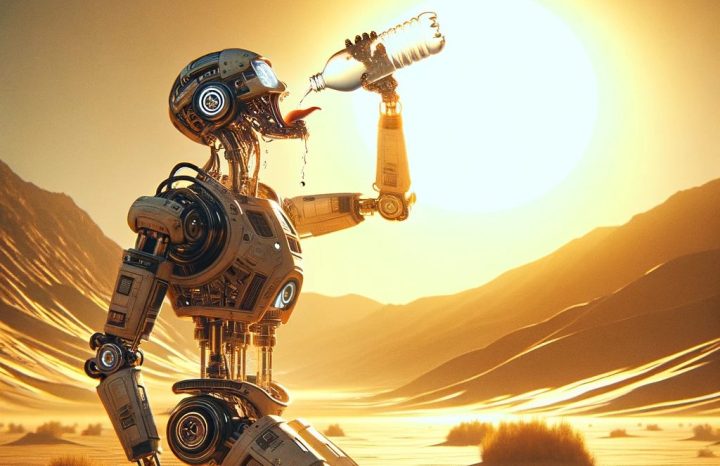

Critical impact: ChatGPT consumes 500ml of water for every five to 50 prompts you send it

As we ramp up towards a world of constantly generating content with AI, we are being asked to consider the environmental cost of its production.

When the undersea Internet cables off the West Coast of Africa (the ones that bring our streamed episodes of Suits into our homes) broke the other week, it reminded all of us that our intangible digital world and the fancy AI we all love still need kilometres of chunky metal to work.

Similarly, as we ramp up towards a world of constantly generating content with AI, we are being asked to consider the environmental cost of its production.

According to a paper published late last year, ChatGPT is thirsty for an estimated 500ml of fresh water for every five to 50 prompts or questions it answers. The range depends on where its servers are located and on the season. The estimate includes indirect water usage: the water needed to cool the power plants that supply the data centres with electricity. And, frankly, this is only the beginning…
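To put that range in per-prompt terms, here is a rough back-of-the-envelope sketch. It uses only the 500ml and the five-to-50 figures quoted above; the function and variable names are my own, for illustration only.

```python
# Figures quoted in the article: 500 ml of water covers between 5 and 50 prompts.
WATER_ML = 500
PROMPTS_WORST_CASE = 5   # fewest prompts per 500 ml (thirstiest location or season)
PROMPTS_BEST_CASE = 50   # most prompts per 500 ml


def water_per_prompt_ml(total_ml: float, prompts: int) -> float:
    """Average water used per prompt, in millilitres."""
    if prompts <= 0:
        raise ValueError("prompts must be positive")
    return total_ml / prompts


worst = water_per_prompt_ml(WATER_ML, PROMPTS_WORST_CASE)
best = water_per_prompt_ml(WATER_ML, PROMPTS_BEST_CASE)
print(f"Per prompt: between {best:.0f} ml and {worst:.0f} ml")
# prints: Per prompt: between 10 ml and 100 ml
```

So a single question costs somewhere between a couple of teaspoons and a small glass of water, depending on where and when it is answered.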

The big guys can’t hide how much more water they now need. In its environmental report, Microsoft said that its global water consumption spiked 34% from 2021 to 2022 (to over 6 billion litres). That is a sharp increase compared with previous years, and researchers reckon it has to do with all the company’s work with AI.

Shaolei Ren, a researcher at the University of California, Riverside and one of the authors of the paper cited above, says that training GPT-3 in Microsoft’s state-of-the-art US data centres used 700,000 litres of clean freshwater, but the real problem is going to come when the public becomes increasingly obsessed with asking their AI assistants questions.

The paper says global AI demand may mean we withdraw between 4.2 and 6.6 billion cubic metres of water in 2027, which is about half of the total water that the UK currently uses in an entire year. I had to look this up, but a cubic metre of water is the same as 1,000 litres.
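For anyone else who has to look it up, the conversion can be sketched in a few lines. The only inputs are the paper's 4.2 and 6.6 billion cubic metre projections and the 1,000 litres per cubic metre figure; the names are mine.

```python
LITRES_PER_CUBIC_METRE = 1_000


def cubic_metres_to_litres(cubic_metres: int) -> int:
    """Convert a volume in cubic metres to litres."""
    return cubic_metres * LITRES_PER_CUBIC_METRE


# Projected 2027 withdrawal range quoted in the article, in cubic metres.
low_m3 = 4_200_000_000
high_m3 = 6_600_000_000

low_l = cubic_metres_to_litres(low_m3)
high_l = cubic_metres_to_litres(high_m3)

# One trillion = 1e12, so divide by that for a readable figure.
print(f"{low_l / 1e12:.1f} to {high_l / 1e12:.1f} trillion litres")
# prints: 4.2 to 6.6 trillion litres
```

In other words, the projection is in the trillions of litres, against the 700,000 litres cited for training GPT-3.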

Addressing these problems gets harder when we also expect our AI models to keep getting faster and better. We can reduce computational intensity (and so reduce the environmental impact) by sacrificing some precision and accuracy in our models. This process is called quantisation. It may seem like a step backwards, but not every use case needs the model to be working at the highest level, so being able to dial this down will reduce the footprint to a degree.
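To make the trade-off concrete, here is a toy sketch of one common flavour of quantisation: squeezing floating-point weights into 8-bit integers with a single shared scale. This is an assumption on my part for illustration. Real frameworks use more sophisticated schemes, and the weights below are made up.

```python
def quantise_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero list, where any scale would do.
    scale = max_abs / 127 if max_abs else 1.0
    quantised = [round(w / scale) for w in weights]
    return quantised, scale


def dequantise(quantised, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantised]


# Made-up example weights; a real model has billions of these.
weights = [0.82, -1.27, 0.003, 0.5]

q, scale = quantise_int8(weights)
restored = dequantise(q, scale)
errors = [abs(w - r) for w, r in zip(weights, restored)]

print("stored integers:", q)
print("largest rounding error:", max(errors))
```

Each weight now fits in one byte instead of four, which means less memory to move and cheaper arithmetic. The price is the rounding error: the tiny weight 0.003 is rounded all the way down to zero, which is the "sacrificed precision" in miniature.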

A positive is that models use less energy once they have been trained. A negative is that an AI can forget what it has already learned as it learns new things (which reminds me of when Homer learnt how to make wine and forgot how to drive), so it may need to be taught a second time and consume additional power.

As you can imagine, a whole cottage industry around “green software” has sprouted up during AI’s ascent. But the real pressure that might save the environment is going to be the cost to businesses.

Every time your piece of software needs to access an LLM like ChatGPT you have to pay. So building an AI chatbot at the moment is cheap, but if it becomes wildly popular then it can be pricey for you to maintain.

When the chatbot on your website needs to send a message to ChatGPT and get an AI-generated response you are charged. As a result, already in the bot world, you are seeing a hybrid of automated conversations (with preplanned responses), AI-generated conversations and the option for a human to jump in and take over.
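That hybrid can be sketched roughly as follows. Everything here is hypothetical: the canned answers, the handoff keywords and the `call_llm` stub are stand-ins I made up, and a real bot would call a paid API at that point rather than return a placeholder string.

```python
from dataclasses import dataclass

# Hypothetical preplanned responses, keyed by a trigger phrase.
CANNED_RESPONSES = {
    "opening hours": "We are open 9am to 5pm, Monday to Friday.",
    "refund": "You can request a refund within 30 days of purchase.",
}

# Hypothetical words that signal the user wants a person.
HANDOFF_KEYWORDS = ("human", "agent", "complaint")


@dataclass
class Reply:
    text: str
    source: str  # "canned", "llm" or "human"


def call_llm(message: str) -> str:
    """Stand-in for a paid LLM API call; returns a placeholder string."""
    return f"[AI-generated answer to: {message}]"


class HybridBot:
    def __init__(self) -> None:
        self.paid_calls = 0  # how many times we were charged

    def reply(self, message: str) -> Reply:
        text = message.lower()
        # 1. Let a human take over when the user asks for one.
        if any(keyword in text for keyword in HANDOFF_KEYWORDS):
            return Reply("Connecting you to a person.", "human")
        # 2. Use a free, preplanned response when one matches.
        for trigger, answer in CANNED_RESPONSES.items():
            if trigger in text:
                return Reply(answer, "canned")
        # 3. Only then pay for an AI-generated response.
        self.paid_calls += 1
        return Reply(call_llm(message), "llm")


bot = HybridBot()
for message in [
    "What are your opening hours?",
    "Can you explain your pricing tiers?",
    "I want to speak to a human",
]:
    answer = bot.reply(message)
    print(f"{answer.source}: {answer.text}")
print("paid calls:", bot.paid_calls)
```

Only one of the three messages above reaches the paid model. Every question answered by a canned response or a person is one fewer charge, and one fewer trip to a thirsty data centre.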

The chatter around the environmental impact of AI is going to increase and, as usual, people don’t want to sacrifice what they see as progress, but they also always want to save a few bucks. This thrifty nature, at least, could slightly curb the environmental chaos that’s coming.

In the news…

- Apple has been frustratingly claiming Siri to be more than it is for years. Its new AI move, ReALM, may actually reverse Siri’s lobotomy and make it useful. The model is tiny compared to GPT-4, but that is because all it has to do is reconstruct your iPhone’s screen and label each part of what you are seeing with text. This text can only be seen by the phone’s voice assistant, which is ready to receive your instructions. So if you are scrolling through a website you can ask Siri to “call the business” and it will be able to “see” the phone number and ring them. I mean, that is great… but Siri launched back in 2011, and if a voice assistant can’t do this sort of task then there has been no point in us tolerating it for over a decade.

- Jon Stewart finally got his boot in around AI, though it felt rather toothless. For a better analysis of AI and disinformation read this by Julius Endert.

DM

Develop AI is an innovative company that reports on AI, builds AI-focused projects and provides training on how to use AI responsibly.

Subscribe to Develop AI’s newsletter here.

It’s exhausting to even contemplate writing such nonsense, prompting one to ponder the environmental footprint left by composing this on a laptop. From electricity usage to the so-called renewable power sources, one must consider the recycling cost of those solar panels.

Also important to remember the vast energy requirements for AI in addition to the already vast energy needs of data centres for the cloud. I’m guessing the water is for cooling all the tech.

Absolutely, you’re right about the energy demands and, you’re right, the water is to keep everything cool and working.

Why does it have to be fresh, clean water though? Surely seawater can be churned to cool these centres?

Salt water is corrosive and it is also more conductive (which means you are more likely to get electrical short circuits).

The good news is that we can expect the semiconductor industry to adapt to the changes in computational demand with smaller lithography and computational architectures better suited to the demands of AI, just as they have with the GPU industry. That will result in better efficiencies and lower environmental impact per query.

I completely agree. This is a great point.

Every day that goes by where Elon is not on Mars we’re not housing data centers on the poles.

This article is a bit strange in claiming that AI is used merely “to generate content”. ChatGPT4 is like having a super power. In work productivity, it’s like having gained an extra day per week. I hardly ever use Google anymore.

“I am too lazy to think and write (type), so I will outsource 75% of it to ChatGPT to do it for me”