SMALL SCREEN

This weekend we’re watching: Coded Bias

‘Coded Bias’ is an impressively extensive documentary on how racism and sexism creep into the unseen algorithms that control our lives, told by women activists who’re fighting the big tech companies that hold the code.

Most of us have an innate respect for code. Algorithms have proven to be more efficient than people at certain tasks, so we’ve come to revere the machine for its accuracy and neutrality.

The trouble is, when we use an algorithm to make a prediction, we are not always aware of what calculations it made to reach its conclusion or what biases are built into it. By putting our faith in algorithms, we enable the systematic reinforcement of these biases.

Data scientist Cathy O’Neil defines an algorithm as “using historical information to make a prediction about the future.” Machine learning builds on the data it’s given, so if an algorithm is fed a skewed data set, it creates a self-perpetuating pattern of bias.

Coded Bias is a documentary exposé on how bias is built into the unseen algorithms that control our lives. It has an impressively extensive scope, concisely covering a variety of AI-related current affairs without losing focus and direction. By framing each issue from the perspective of how it is presently affecting people’s lives, activist director Shalini Kantayya creates an accessible, relevant and entertaining film on subjects that might otherwise have been dry, technical and impersonal.

The film begins and ends with Joy Buolamwini, now known as the founder of the Algorithmic Justice League. As a student at MIT, she was building an art-science project that could detect faces in a mirror and project other faces and images over them. But the software seemed unable to detect black faces.

Her curiosity piqued, Buolamwini researched the data sets of images used to teach face detection algorithms to see, and discovered that they contained significantly fewer black faces.

The discrimination this problem causes goes far beyond the slight inconvenience to her art project. Police departments around the world have begun adopting biometric surveillance to identify and arrest suspected criminals. Even if these algorithms were perfectly accurate, their use by law enforcement would be ethically dubious, but the fact that they’re particularly ineffective at identifying black faces is a huge problem: it leads to large numbers of innocent black people being misidentified as wanted criminals.

It’s like giving a racist man who cannot distinguish between black faces the authority to stop and harass anyone he thinks looks suspicious. Later on in the film, Buolamwini speaks in Cape Town about facial recognition bias and compares its use by law enforcement to the use of the dompas during Apartheid.

At one point we see four undercover London policemen stop and question a 14-year-old black boy in school uniform because his face had matched an entry in their criminal database.

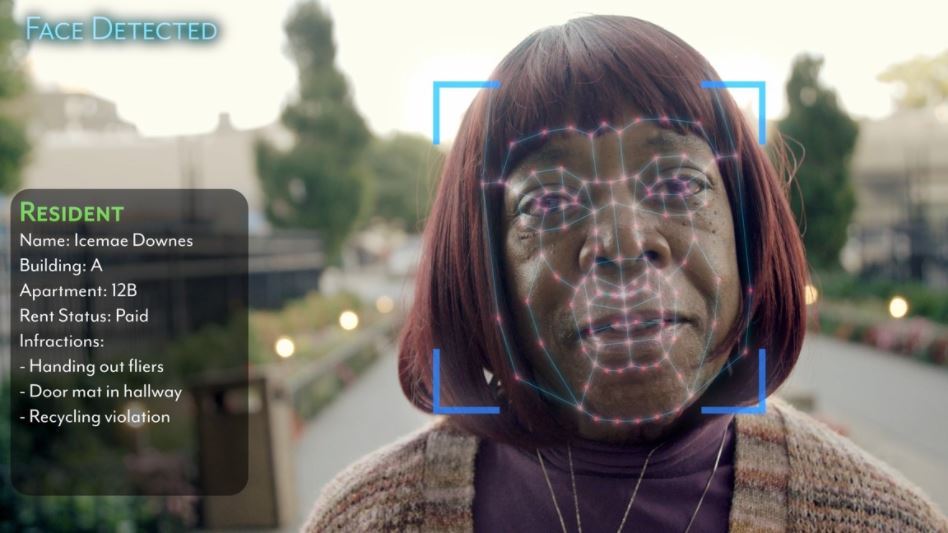

This is but one of the concerning algorithmic abuses explored in Coded Bias. We learn how landlords in Brooklyn, New York are using facial recognition to spy on their tenants; we learn how China is enforcing a social scoring algorithm much like the one depicted in Nosedive, the famous episode of dystopian science fiction horror series, Black Mirror; and then there are the assessment algorithms …

Image from ‘Coded Bias’ now on Netflix (Image courtesy of Netflix)

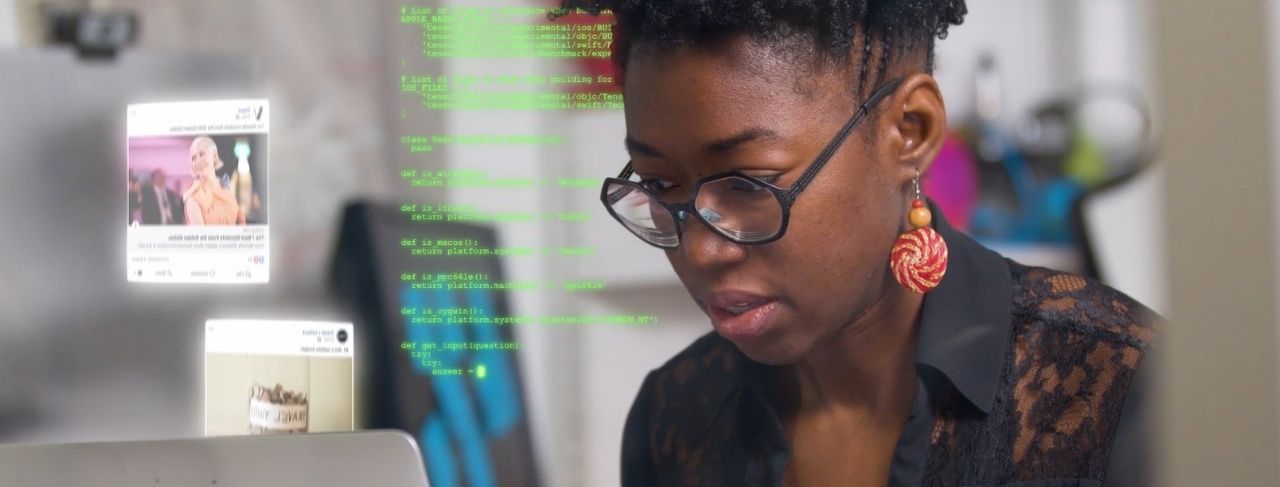

Image from ‘Coded Bias’ now on Netflix (Image courtesy of Netflix)

Imagine an algorithm purportedly designed to identify the highest-performing demographic in a company in order to decide what kind of person to hire. It could only “learn” from people who have already worked at that company, so if a certain demographic is overrepresented because of privilege or past prejudicial hiring, the algorithm is likely to wrongly conclude that this demographic has the best chance of success.

This is exactly what happened at Amazon when it tried to use an algorithm to assess the resumes of job applicants. Because the company was dominated by men, the highest-achieving employees were – you guessed it – men. So the algorithm learned to penalise resumes from female applicants.
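To make the hiring scenario concrete, here is a deliberately naive toy sketch in Python. It is not Amazon’s system, whose internals the film doesn’t describe; the records, the group labels and the functions `learn_top_performer_profile`, `score_applicant` and `success_rate` are all hypothetical. The “model” simply asks what past top performers looked like, so a workforce that is 80% men teaches it that men are four times as promising, even though both groups succeed at exactly the same rate.

```python
from collections import Counter

# Hypothetical historical records: (group, was_top_performer).
# Past hiring skewed heavily towards men, but within each group
# the share of top performers is identical (20%).
history = (
    [("man", True)] * 16 + [("man", False)] * 64        # 80 men, 16 top performers
    + [("woman", True)] * 4 + [("woman", False)] * 16   # 20 women, 4 top performers
)


def learn_top_performer_profile(records):
    """Naive 'model': what share of past top performers came from each group?"""
    counts = Counter(group for group, top in records if top)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def score_applicant(profile, group):
    """Score an applicant by how closely they resemble past top performers."""
    return profile.get(group, 0.0)


def success_rate(records, group):
    """The actual rate of top performance within a group."""
    outcomes = [top for g, top in records if g == group]
    return sum(outcomes) / len(outcomes)


profile = learn_top_performer_profile(history)
print("learned profile:", profile)
print("applicant scores:", score_applicant(profile, "man"), score_applicant(profile, "woman"))
print("real success rates:", success_rate(history, "man"), success_rate(history, "woman"))
```

The learned profile favours men only because there were more of them to begin with; the real success rates show no difference at all.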

According to the film, less than 14% of AI researchers are women, yet the film features almost exclusively female experts. One of the most prominent is Cathy O’Neil, an eloquent, fired-up algorithmic activist. She succinctly describes the core problem of our dependence on AI: “If we use algorithms to replicate the world as it is today, we’re not actually going to make social progress.”

If every weatherman in the world predicts that it’s going to rain in Johannesburg on Tuesday, that has no effect on whether it actually does. But if every economist predicts that Facebook’s stock will plunge on Tuesday, shareholders will likely sell as much of their stock as possible, driving the price down and ensuring that it does indeed plunge. (We may never even know whether the stock would have tanked were it not for the doom-and-gloom predictions.)

Racist predictive algorithms are similarly self-perpetuating. For example, some courts are allowed to use algorithms to assess the risk of recidivism (the likelihood that a convicted criminal will offend again). These algorithms draw on data such as age, race and gender, so they are biased by nature.

If an algorithm mislabels a defendant as having a high risk of returning to crime because of their race, that person may be unable to get a job as a result, which increases their risk of returning to crime after all. And on and on the wheels of injustice turn.
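The loop described above can be sketched as a toy simulation in Python. It is not how any real recidivism tool works: the two groups, the rates, the cutoff and the “retraining” rule are all hypothetical assumptions chosen for illustration. Both groups have the same true risk, but group B starts with an inflated score. Because the “high risk” label shuts people out of work, the outcomes the model then observes push group B’s score further up, while group A’s stays put.

```python
# Toy model of a self-fulfilling risk score. Every number here is a
# hypothetical assumption chosen for illustration only.
BASE_RATE = 0.30         # true chance of reoffending, identical for both groups
JOB_PENALTY = 0.15       # extra risk for someone shut out of work by a "high risk" label
HIGH_RISK_CUTOFF = 0.35  # scores above this are labelled "high risk"
LEARNING_RATE = 0.5      # how far each retraining moves a score towards observed outcomes


def observed_rate(score):
    """Reoffending rate actually observed for a group carrying this score."""
    labelled_high_risk = score > HIGH_RISK_CUTOFF
    # The label itself makes finding a job harder, which raises real risk.
    return BASE_RATE + (JOB_PENALTY if labelled_high_risk else 0.0)


def retrain(scores):
    """Nudge each group's score towards the outcomes the score helped cause."""
    return {
        group: (1 - LEARNING_RATE) * score + LEARNING_RATE * observed_rate(score)
        for group, score in scores.items()
    }


# Group B starts with an inflated score purely because of biased input data.
scores = {"group A": 0.30, "group B": 0.40}
for round_number in range(1, 6):
    scores = retrain(scores)
    print(round_number, {g: round(s, 3) for g, s in scores.items()})
```

Group B’s score climbs from 0.40 towards 0.45 and never falls back below the cutoff, so the original mislabel looks “confirmed” by data it helped create.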

Algorithms don’t reach conclusions using human reason – they learn using probability. This makes it difficult to interrogate why an algorithm made a particular decision. Once an algorithm has learned enough, it often becomes a black box, even to its programmers. “How do we get justice in a system where we don’t know how the algorithms are working?”

To be clear, AI is nobody’s enemy. For now, the depiction of AI perpetuated in pop culture science fiction as robots that think like super-intelligent humans is a fantasy. In reality, we have only been able to create narrow AI. Narrow AI is just lines of math. While it may sometimes convincingly emulate consciousness, it merely adapts its “behaviour” based on the data it’s fed and the initial parameters of its code. The problem is that the data and parameters are provided by humans.

In the first scene of Coded Bias, we see an animated sound wave reading tweets from “Tay”, an AI chatbot that Microsoft released on Twitter in 2016 and that developed a “personality” by observing others’ tweets. Tay survived only 16 hours before it was taken down for posting offensive tweets. It took just a few hours for Tay to learn to say things like “Gamergate is good and women are inferior” and “GAS THE KIKES RACE WAR NOW”.

There was nothing inherently wrong with Tay. The reason it so quickly became an asshole is that it was fed racist and sexist data. While the opening animation of Tay’s tweets is quite powerful, the similar recurring scenes in which an AI voice reads a script explaining concepts that have just been explored are less so. They’re supposed to be spooky and evoke fear of the mysterious, uncontrollable enigma that is artificial intelligence. These crude scenes could have been cut entirely: they’re at odds with the rest of the film, which treats the widely misunderstood notion of AI more holistically and intellectually.

AI is just a tool. It’s a hammer that could just as easily be used to build the future or bash in the skulls of the powerless. So who wields the hammer? At the moment, China and the US. China uses a lot of AI to maintain political control, but in America, AI is mostly geared towards capitalist exploits. Code is inherently political. The politics of those who code the future of AI could shape the lives of everyone on earth.

AI is currently balanced in a precarious space where the technology is being rapidly adopted, but it’s still new enough that there aren’t sufficient safeguards in place to prevent its misuse. We are living in the Wild West of AI. Part of the message of Coded Bias is that we still have the time and power to create reliable regulations.

Buolamwini’s activism has pushed large software companies such as IBM to reform their algorithms, and her testimony before Congress in 2019 helped prompt a wave of American cities banning government use of facial recognition technology.

Coded Bias revels in the conspiratorial excitement of cracking algorithmic injustice wide open but still keeps a level head. It’s excellently paced – it doesn’t bore you by labouring over any one subject, and it’s not so manic that it fractures into fragments or causes confusion. Its combination of data analysis and personal anecdote makes it highly convincing to sceptics and empaths alike.

We forget how pervasive algorithms are. It’s so easy to be enticed by their convenience. But it’s crucial that we understand that they’ve been shaped by just a few privileged human hands. They’re not infallible, and they have tremendous potential for misuse. Indeed, violations of the right to one’s own data, particularly biometric data, will likely become one of the most widespread civil rights abuses globally unless people wise up to the power of code. DM/ML

Coded Bias is available in South Africa on Netflix. You can contact This Weekend We’re Watching via email.