
Scientists pull no punches in teaching robots to do no harm

With power comes responsibility, and this is a lesson that even robots need to be taught or, more correctly, have programmed into their circuits. As robots are increasingly designed with direct human interaction in mind, it becomes all the more important that they “respect” the rules. But to teach robots to obey Asimov’s first law, scientists are initially experimenting with breaking it.

The development of robots capable of human interaction may be the domain of scientists, but as in so many other scientific fields, ethical concerns demand to be taken into consideration. Forget nightmarish visions of robots going on a wild killing spree and think instead of more quotidian situations – would you trust a robot helper in your kitchen if it were carrying a knife? And how about a robot server bringing you snacks? It may not be armed as such, but if it bumped into you, it could cause a nasty injury.

In 1942, science fiction maestro Isaac Asimov formulated the three laws of robotics in a short story called Runaround (his editor, John Campbell, also played a role in formulating them – Ed). These three laws have long since moved beyond the fictional realm and, in fact, underlie much of the real-world research in Human-Robot Interaction (HRI). If you haven’t read, or reread, Asimov recently, here’s a refresher:

- A robot may not harm a human being or, through inaction, allow a human being to come to harm;

- A robot must follow the orders given to it by a human being (unless this is in conflict with the first law); and

- A robot must protect its own existence (as long as that doesn’t violate the first two laws).

Humans may agree in principle that these laws are A Very Good Thing (megalomaniac dictators who’d be quite happy to have drones do their evil bidding excluded), but how do we program the robots to obey them?

Researchers in Slovenia are experimenting with a method that seemingly goes against the letter of Asimov’s three laws, but is very much in their spirit. A recent study at the University of Ljubljana involves a robot repeatedly hitting people on the arm at varying levels of intensity. This practice is in direct contravention of the first law, but the scientist who conceptualised the research, Borut Povše, insists that it’s all for a good cause.

Povše’s research, which was presented at the IEEE’s Systems, Man and Cybernetics conference in Istanbul this week, studies the pain threshold in human-robot collisions, with the ultimate intention of programming robots so they don’t cross this boundary. Six of Povše’s male colleagues were recruited as test subjects, although it’s unknown whether they offered themselves up in the selfless spirit of guinea pigs, or to prove their macho qualities. (If the project were being conducted in the US, we’d be pretty sure they’d have signed a reality TV deal to help fund it.)

In the experiments, robots travelling at different speeds, and holding various objects, hit the six volunteers on their arms. The “victims” were then asked to describe their pain levels. Further tests, involving greater impact force, will be conducted using artificial arms, to prevent human injury. Once they’ve established an upper limit for how fast robots should move when around humans, scientists will be able to program this constraint into robotic circuits and thus prevent potentially nasty injuries.
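For the technically curious, here is a rough sketch in Python of how such a speed cap might be derived and enforced. It is not Povše’s method: the 0-to-10 pain scale, the speeds, the trial figures and the names `Trial`, `safe_speed_limit` and `clamp_speed` are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    speed_m_s: float  # robot speed at impact (hypothetical values)
    pain_rating: int  # self-reported pain, 0 (none) to 10 (assumed scale)


def safe_speed_limit(trials, max_pain):
    """Highest tested speed up to which no trial exceeded max_pain."""
    # Record the worst pain rating reported at each tested speed.
    worst_by_speed = {}
    for t in trials:
        worst = worst_by_speed.get(t.speed_m_s, 0)
        worst_by_speed[t.speed_m_s] = max(worst, t.pain_rating)

    # Walk upwards through the speeds and stop at the first one that hurt too much.
    limit = 0.0
    for speed in sorted(worst_by_speed):
        if worst_by_speed[speed] > max_pain:
            break
        limit = speed
    return limit


def clamp_speed(requested, limit):
    """Cap a requested speed at the limit (and never go below zero)."""
    return max(0.0, min(requested, limit))


# Invented data: six impacts at four speeds.
TRIALS = [
    Trial(0.2, 1), Trial(0.2, 2),
    Trial(0.4, 2), Trial(0.4, 3),
    Trial(0.6, 5), Trial(0.8, 7),
]

if __name__ == "__main__":
    limit = safe_speed_limit(TRIALS, max_pain=3)
    print("speed limit near humans:", limit)
    print("requested 1.0, allowed:", clamp_speed(1.0, limit))
```

The sketch deliberately errs on the side of caution: it stops at the first speed that produced too much pain, even if a faster trial happened to score lower. A real system would also have to account for the object being carried and the part of the body at risk.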

Despite its practical application, the unorthodox method used in Povše’s experiment will no doubt provoke much discussion in the roboethics community. Asimov could be said to be the father of roboethics, but in the 21st century this increasingly interdisciplinary field has gained more serious academic attention. It’s no longer only a philosophical question to be explored through fiction, but, among other things, a practical debate concerning applied ethics. Some scientists contend that they bear no moral responsibility for their technical research, and aren’t much bothered about the issues involved. Others, however, are concerned about the implications of human-robot interaction, and are keen to explore these issues and to draw up moral, legal and ethical guidelines.

The average person may have a personal stake in this debate sooner than one might think: it’s predicted that every household in South Korea will have a robot as soon as 2020. Research that’s geared towards improving the safety of human-robot interactions is thus imperative. Povše’s experiment may have Asimov purists up in arms, but its end goal seems sound – to set practical, tested standards that will improve safety in human-robot interactions. By the time we all have our own “pet” R2-D2, hopefully we needn’t worry about it harming us – intentionally or not. DM

Read more: New Scientist, Wikipedia.

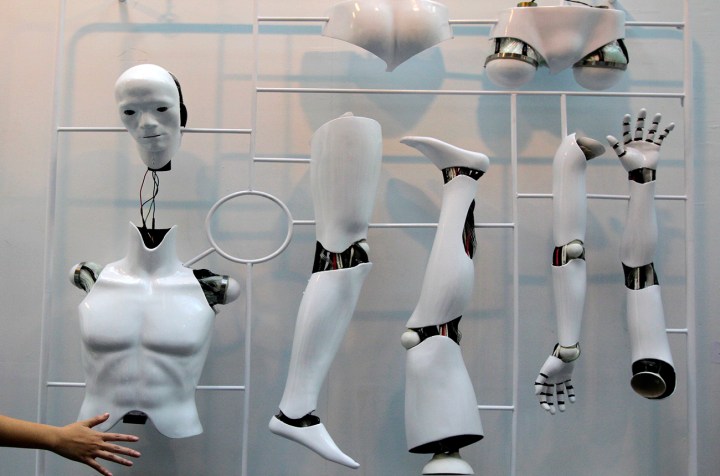

Photo: A visitor waves in front of the sensor that triggers the joints of a dummy that is part of “PWM1”, an art creation by Taiwan artist Huang Zan-lun, during Art Taipei 2010 on August 20, 2010. Art Taipei 2010, featuring 110 galleries from 11 countries, will be held from August 20-24 at the Taipei World Trade Centre. REUTERS/Pichi Chuang
