BOTSPOTTING

Sockpuppets trolling Helen Zille turn out to be nothing more than a faulty analytical tool

A botspotting analysis using a single, inaccurate tool had prominent South African politicians levelling claims of co-ordinated social media manipulation against one another.

An analysis by botspotting service Twitter Audit of the followers of Helen Zille, federal chairperson of the Democratic Alliance (DA), suggested that nearly half of her 1.4 million followers are “fake”. Zille accused her political opponents of running these accounts to manufacture outrage against her and “destroy her online”.

DA federal chairperson Helen Zille accused her political opponents of orchestrating ‘bots’ against her. (Source: @helenzille/archive)

Although Twitter Audit is a popular and well-known botspotting service, research conducted in 2014 found that it has inherent limitations that render its analysis incomplete, especially for accounts with large numbers of followers. Its methodology, besides being opaque and undocumented, was labelled “flawed” by Twitter itself.

Twitter Audit should be used with its limitations in mind, and using it as the basis for claims of a co-ordinated social media campaign by political opponents risks reading more into its results than they can support. While awareness of automation on social media platforms has increased drastically over the past few years, it has also created a tendency for users to dismiss disagreeable content as the work of “bots”.

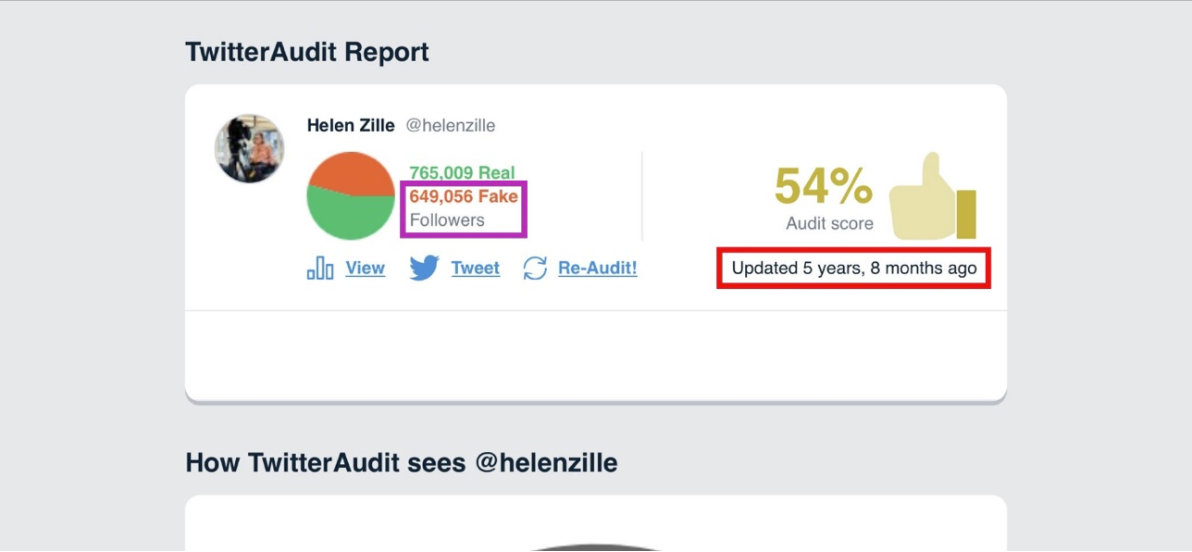

A screengrab of Zille’s earlier Twitter Audit report. Note the number of fake followers (purple) and the date of the audit (red). (Source: @fireflyluciferi/archive)

In its retort to Zille’s tweets, the Economic Freedom Fighters (EFF) stated that the fake accounts following Zille were created by the DA to shore up support for Zille online. Godrich Gardee, the EFF’s former secretary-general, also accused the DA of using the very same tactics against him, claiming he was “stalk[ed] and harass[ed]” by DA bots.

The EFF’s Godrich Gardee claimed that the DA created fake accounts to ‘stalk and harras’ [sic] him. (Source: @Gardeeodrich/archive)

Sockpuppets and spin doctors

Zille and Gardee’s comments were made within an information environment in South Africa that has a history of targeted online harassment of journalists, editors and politicians, especially using social media.

Social media manipulation was thrust into the spotlight in November 2016 when independent researchers in South Africa discovered scores of sockpuppet accounts attempting to salvage the reputation of the Gupta brothers, well-connected businessmen with close ties to former president Jacob Zuma. (In December 2017, the DFRLab reported on seemingly US-based bots that were posting in an effort to sway elections within the African National Congress.)

Sockpuppets are social media accounts that pose as something or someone else, often with the intent to deceive an audience and sway public perception. Many of these accounts masqueraded as South African citizens but could be traced back to the Guptas’ home country of India. They created and amplified disinformation websites and co-ordinated personal attacks against anyone critical of the Gupta family. They later became colloquially known as the “Guptabots” and ultimately leveraged racial tensions to divert attention from the family’s less-than-stellar public image.

It later transpired that, during this period, the Guptas had also commissioned British public relations firm Bell Pottinger, through the family’s main holding company, Oakbay Investments, to manage the family’s public image. The Oakbay account eventually led to Bell Pottinger’s downfall when it emerged that the firm had used racially divisive tactics to sanitise the Gupta family’s public image.

Former Bell Pottinger employees admitted they created similar websites and social media accounts to leverage racial and class tensions for the Guptas’ benefit.

During this time, journalists, editors and politicians were frequently targeted by scores of these accounts, and exposés on their personal lives were published on anonymous websites.

A screengrab from the now-defunct WMCLeaks website, used by the Guptabots to smear critics of the Gupta family. (Source: WMCLeaks/archive)

Although the experience sensitised many South African social media users to the threat of social media manipulation and its associated risks, it also left many of them inclined to label any opposing view as a “bot”.

Audit alteram partem

The reactions of Zille and Gardee are hardly surprising within this context, but both shot wide of the mark because they did not scrutinise the methodology underlying the analysis.

The Twitter Audit interface. (Source: Twitter Audit)

Twitter Audit is one of several online tools that claim to identify the fakes among an account’s followers. It presents a simple user interface that only requires a visitor to enter the username of the account to be analysed.

But how does it work? According to Twitter Audit’s website, each audit samples up to 5,000 Twitter followers for that user and calculates a score for each. This score is based on the number of tweets, the date of the last tweet and the account’s ratio of followers to friends.
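In outline, an audit of this kind samples, scores and extrapolates. The sketch below is a hypothetical reconstruction, not Twitter Audit’s code: the `audit` function, the assumption that `followers` arrives newest-first and the pluggable `is_fake` rule are all ours, since the real scoring algorithm is undisclosed. It shows how a share measured on at most 5,000 accounts becomes a headline figure for the entire following.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")

SAMPLE_LIMIT = 5_000  # the per-audit cap Twitter Audit states on its website


def audit(followers: Sequence[T], total_followers: int,
          is_fake: Callable[[T], bool]) -> int:
    """Estimate the number of fake followers from a capped sample.

    Hypothetical sketch: `followers` is assumed to be ordered newest-first,
    and `is_fake` stands in for Twitter Audit's undisclosed scoring rule.
    """
    sample = followers[:SAMPLE_LIMIT]  # inspect at most 5,000 accounts
    if not sample:
        return 0
    fake_share = sum(1 for f in sample if is_fake(f)) / len(sample)
    # The share seen in the sample is projected onto the whole following.
    return round(fake_share * total_followers)


# Usage: 2,000 of 5,000 sampled accounts flagged, projected onto 1.4 million.
flags = [True] * 2_000 + [False] * 3_000
print(audit(flags, 1_400_000, lambda flagged: flagged))  # 560000
```

Whatever rule decides “fake”, any error in the sample is multiplied by the full follower count, so a small bias in who gets sampled can translate into hundreds of thousands of accounts.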

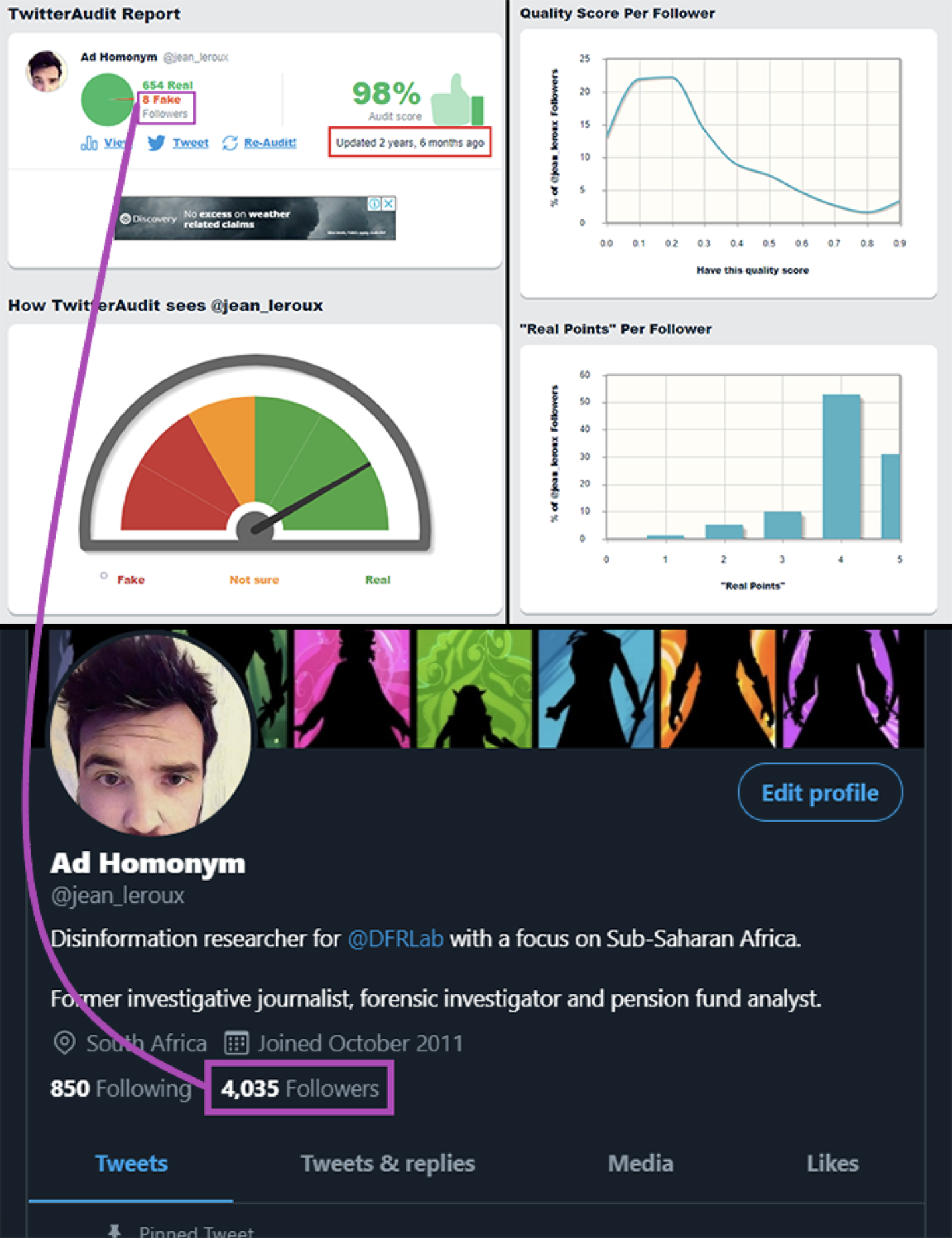

The Twitter Audit results page for the author. Note the outdated audit date (red) and the inaccurate number of followers (purple). (Source: @jean_leroux/DFRLab via Twitter Audit)

Twitter Audit does add a disclaimer to its results, stating that its “scoring method is not perfect but it is a good way to tell if someone with lots of followers is likely to have increased their follower count by inorganic, fraudulent, or dishonest means”.

The flaws in Twitter Audit’s methodology were highlighted in a conference paper published in June 2014. Its five authors assessed Twitter Audit alongside three similar platforms and highlighted the inadequacies of these tools in programmatically identifying fake followers. They concluded that the tools’ results are questionable at the very least and likely lack any reliability.

Their research also found that Twitter Audit samples the last 5,000 accounts to follow the target of an audit, not a randomised sample of 5,000 followers. This introduces a chronological bias that can distort the results when they are extrapolated to the much larger following. For example, an account’s 5,000 most recent followers are more likely to include newly created accounts than a random sample of 5,000 of its followers.
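The effect of sampling only the newest followers can be illustrated with a small simulation. This is a hypothetical model, not data from any real account: we assume 100,000 followers, of whom the newest 5,000 look “fake” 40% of the time and all older ones 10% of the time. The function names and rates are our own illustrative choices.

```python
import random


def simulate_followers(n, recent, p_recent, p_old, seed=0):
    """Return True/False "looks fake" flags, ordered oldest -> newest.

    Hypothetical model: each of the `recent` newest followers looks fake
    with probability p_recent; every older follower with probability p_old.
    """
    rng = random.Random(seed)
    cutoff = n - recent
    return [rng.random() < (p_recent if i >= cutoff else p_old)
            for i in range(n)]


def fake_share(flags):
    """Fraction of accounts in `flags` that look fake."""
    return sum(flags) / len(flags)


followers = simulate_followers(100_000, recent=5_000, p_recent=0.4, p_old=0.1)

newest_5000 = followers[-5_000:]  # most recent followers only
random_5000 = random.Random(1).sample(followers, 5_000)  # uniform random sample

print(f"newest 5,000: {fake_share(newest_5000):.1%}")
print(f"random 5,000: {fake_share(random_5000):.1%}")
```

Under these assumed rates the newest-5,000 sample reports a fake share near 40%, while the random sample lands near the population’s true share of about 11.5% (0.05 × 0.4 + 0.95 × 0.1). For an account with 5,000 or fewer followers, both samples are simply the whole following, so the bias only appears as the account grows.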

While the study found that this sampling bias is not a concern for accounts with fewer than 10,000 followers, accounts with more than 10,000 followers quickly reached a point where the bias invalidated the integrity of the results.

Twitter Audit limits each account to a single audit. To refresh the analysis, or to sample a larger following, a user must upgrade to one of Twitter Audit’s paid plans. As a result, an audit may rest on an outdated sample that no longer represents the account’s current followers.

Twitter Audit’s scoring system uses three metrics to score a follower: the number of tweets the account has posted, the number of accounts it follows and that follow it, and the date and time of its most recent tweet.

Twitter Audit then applies an opaque algorithm to these three metrics to arrive at a score out of five. Of the four tools the study tested, Twitter Audit was the only one that did not classify inactive accounts separately, and the authors surmised that this leads to a higher proportion of legitimate but inactive accounts being counted as fakes. They also noted that, the larger the account, the more the results of the different tools diverged.
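Why a missing “inactive” category matters can be shown with a toy scorer built on the three metrics above. Every threshold and weight here (`MIN_TWEETS`, `MIN_FOLLOWER_RATIO`, `ACTIVE_DAYS`, the cut-off of 2) is a hypothetical stand-in, because Twitter Audit does not publish its algorithm. The same quiet but genuine account is labelled “fake” by a two-way classifier and “inactive” by one that sets dormant accounts aside first.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical thresholds for illustration only; Twitter Audit's real
# weights and cut-offs are not published.
MIN_TWEETS = 50
MIN_FOLLOWER_RATIO = 0.2
ACTIVE_DAYS = 90


@dataclass
class Follower:
    tweets: int
    followers: int
    following: int
    last_tweet: Optional[date]


def score(f: Follower, today: date) -> int:
    """Score out of five from the three metrics named in the text."""
    s = 0
    if f.tweets >= MIN_TWEETS:
        s += 2
    if f.followers >= MIN_FOLLOWER_RATIO * max(f.following, 1):
        s += 2
    if f.last_tweet is not None and (today - f.last_tweet).days <= ACTIVE_DAYS:
        s += 1
    return s


def is_inactive(f: Follower, today: date) -> bool:
    return f.last_tweet is None or (today - f.last_tweet).days > ACTIVE_DAYS


def classify_two_way(f: Follower, today: date) -> str:
    # No separate bucket: a quiet account is judged purely on its score.
    return "fake" if score(f, today) <= 2 else "real"


def classify_three_way(f: Follower, today: date) -> str:
    # Dormant accounts are set aside before the fake/real decision.
    if is_inactive(f, today):
        return "inactive"
    return classify_two_way(f, today)


today = date(2019, 1, 1)  # arbitrary reference date
lurker = Follower(tweets=3, followers=20, following=150, last_tweet=date(2017, 3, 1))
print(classify_two_way(lurker, today))    # fake
print(classify_three_way(lurker, today))  # inactive
```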

Unless Twitter Audit releases the details of its algorithm, it is impossible to establish exactly how an account qualifies as “fake” or not, and edge cases remain susceptible to false positives. Zille’s followers raised this very point when she undertook to block all suspicious accounts on her profile, many of them saying they needed to tweet anonymously for fear of online reprisals.

In Zille’s case, a sample of 5,000 accounts amounts to an insignificant 0.35% of her total followers (more than 1.4 million, as of January 14). Even Gardee’s audit sampled less than 5% of his total followers.
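The coverage figures follow from dividing the fixed sample by the follower count. A minimal check (the function name is ours):

```python
SAMPLE_SIZE = 5_000  # followers inspected per Twitter Audit audit


def sample_coverage(total_followers: int) -> float:
    """Fraction of a following that a single 5,000-account sample covers."""
    return min(SAMPLE_SIZE, total_followers) / total_followers


print(f"{sample_coverage(1_400_000):.2%}")  # 0.36%
print(f"{sample_coverage(100_000):.2%}")    # 5.00%
```

At exactly 1.4 million followers the sample covers about 0.36%; with more than 1.4 million, as Zille had, the share is lower still. Coverage falls below 5% for any account with more than 100,000 followers, which is what Gardee’s figure implies about the size of his following.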

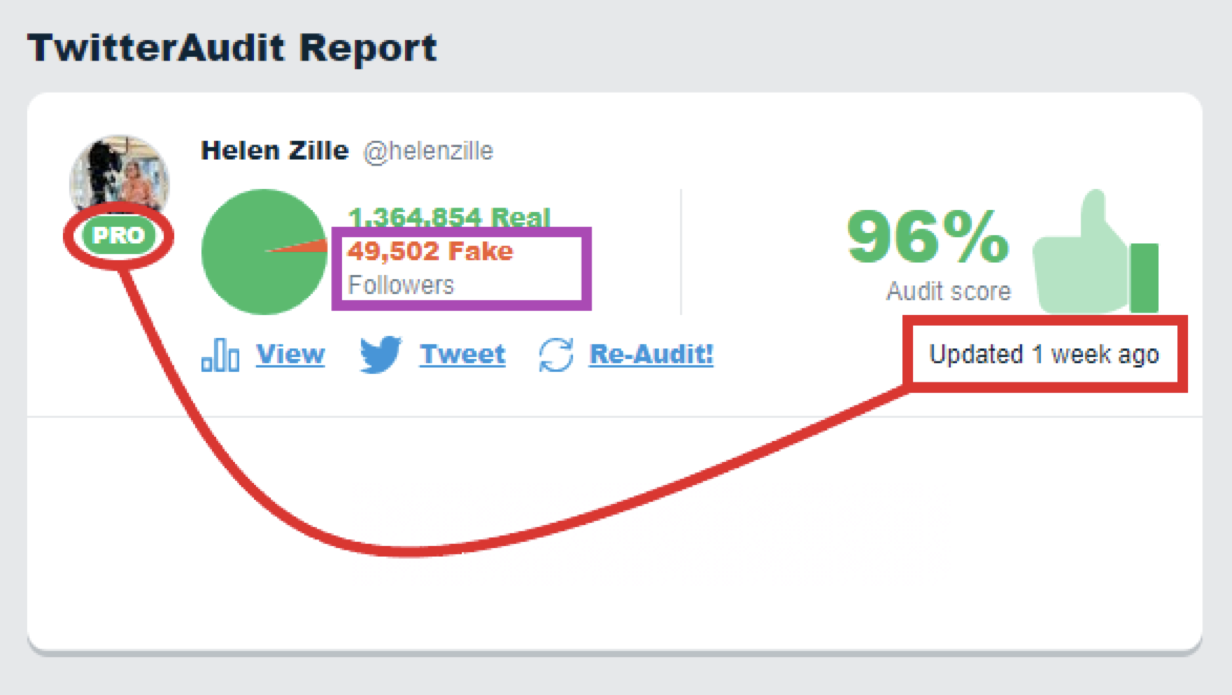

The analysis on which Zille based her accusation was performed nearly six years ago and is unlikely to represent her social media following today. To refresh the analysis of her account, Zille had to buy one of Twitter Audit’s paid plans. When she did, the newer, paid-for analysis put her fake followers at only 49,500, a reduction of roughly 92% from the initial figure of 650,000.
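The size of the drop can be checked from the two audit figures with a one-line percentage calculation (the function name is ours):

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage fall from `before` to `after`."""
    return (before - after) / before * 100


print(f"{percent_reduction(650_000, 49_500):.1f}%")  # 92.4%
```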

The new, updated analysis after Zille purchased Twitter Audit’s Pro option, as indicated by the ‘PRO’ label under her photo. Note the updated audit date (red) and the updated fake followers (purple). (Source: Twitter Audit/archive)

There is another glaring problem with Zille’s claims: Twitter Audit and similar programs cannot divine intent. However suspicious an account’s behaviour may appear, gauging the intent behind it is more art than science. Nothing in Twitter Audit’s analysis indicated that the accounts could be attributed to a political opponent, and the accounts labelled “fake” could as easily have supported Zille as opposed her. Judged on the metrics Twitter Audit assesses, a fake account could be run by either politician, or by neither.

Targeted harassment and trolling on Twitter also do not require the harassing accounts to follow their target. Because Twitter is an open platform, anyone can view an account’s timeline or reply to its tweets, so co-ordinated attacks can be launched without first following the target.

Given Twitter Audit’s sampling issues for accounts with large followings and the opaque methodology it employs, social media users, including politicians, would do well to treat such tools with considerable circumspection. Indeed, the only sure way to establish whether an account is authentic or automated is with access to its operator. Short of that, open-source researchers use a combination of tools and frameworks, such as the DFRLab’s 12 ways to spot a bot, to build a high-confidence assessment of inauthentic behaviour on Twitter; the more tools or frameworks used, the stronger the assessment.
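The principle of combining several independent checks can be sketched as a tally rather than a verdict. This is a hypothetical illustration: the three indicators, their names and their thresholds are our own assumptions for demonstration and are not a transcription of the DFRLab framework. Note that an anonymous but otherwise ordinary account trips only one indicator, which on its own says little.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Profile:
    tweets_per_day: float
    has_photo: bool
    has_bio: bool
    retweet_share: float  # fraction of the account's posts that are retweets


# Hypothetical indicators and thresholds, for illustration only.
INDICATORS: List[Tuple[str, Callable[[Profile], bool]]] = [
    ("high activity", lambda p: p.tweets_per_day >= 72),
    ("anonymous profile", lambda p: not p.has_photo and not p.has_bio),
    ("amplification only", lambda p: p.retweet_share >= 0.9),
]


def assess(p: Profile) -> Tuple[int, int, List[str]]:
    """Count how many independent indicators an account triggers.

    Reports the tally instead of a yes/no verdict: agreement across more
    indicators supports a stronger assessment, but none reveals intent.
    """
    hits = [name for name, check in INDICATORS if check(p)]
    return len(hits), len(INDICATORS), hits


suspect = Profile(tweets_per_day=150, has_photo=False, has_bio=False, retweet_share=0.97)
quiet = Profile(tweets_per_day=0.2, has_photo=False, has_bio=False, retweet_share=0.0)
print(assess(suspect))  # (3, 3, ['high activity', 'anonymous profile', 'amplification only'])
print(assess(quiet))    # (1, 3, ['anonymous profile'])
```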

Suffice it to say, if Helen Zille’s goal was to purge her Twitter followers of people who disliked or harassed her on the platform, her approach was unlikely to achieve it. As Zille’s case shows, relying on a single platform to assess behaviour can lead to false positives and botched conclusions, especially when that platform is not designed for the task at hand. DM

Jean le Roux is a research associate, southern Africa, with the Digital Forensic Research Lab. This article was first published on medium.com and minor edits have been made to adapt it to Daily Maverick’s editorial style.
